Feature scaling

1. What is feature scaling?

Feature scaling is a method used to normalize the range of the independent variables (features) of our data. It is also called data normalization and is generally performed before running machine learning algorithms.

2. Why do we need to use feature scaling?

In practice, the ranges of raw features can differ widely, so without normalization some objective functions will not work properly (for example, the optimizer may get stuck at a local optimum) or will take a long time to optimize.

For example, K-Means might give totally different solutions depending on the preprocessing method you used. This is because an affine transformation changes the metric space: the Euclidean distance between two samples is different after the transformation. Feature scaling also helps gradient descent converge much faster than it does on unnormalized data.
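The effect on distances can be sketched in plain Python. The three samples are made-up numbers, and `euclidean`, `min_max_scale` and `nearest` are hypothetical helpers. The code does not run K-Means itself; it only checks which sample is closest to A, which is the comparison K-Means makes when it assigns a point to its nearest centroid.

```python
import math

def euclidean(p, q):
    """Euclidean distance between two equal-length sequences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def min_max_scale(rows):
    """Rescale each column to [0, 1] using that column's min and max."""
    cols = list(zip(*rows))
    mins = [min(c) for c in cols]
    maxs = [max(c) for c in cols]
    return [
        tuple((v - lo) / (hi - lo) for v, lo, hi in zip(row, mins, maxs))
        for row in rows
    ]

def nearest(target, candidates):
    """Return the name of the candidate closest to target."""
    return min(candidates, key=lambda name: euclidean(target, candidates[name]))

# Hypothetical samples: (feature 1 on a small scale, feature 2 on a large scale)
samples = {"A": (1.0, 1000.0), "B": (9.0, 1100.0), "C": (1.5, 1400.0)}

names = list(samples)
scaled = dict(zip(names, min_max_scale([samples[n] for n in names])))

raw_nn = nearest(samples["A"], {n: samples[n] for n in ("B", "C")})
scaled_nn = nearest(scaled["A"], {n: scaled[n] for n in ("B", "C")})
print("nearest to A (raw):   ", raw_nn)     # B
print("nearest to A (scaled):", scaled_nn)  # C
```

On the raw data the second feature, whose values are hundreds of times larger, dominates the distance; after rescaling, both features count on the same [0, 1] scale and the nearest sample changes.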

[Figure: gradient descent with and without feature scaling]
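A rough sketch of the gradient-descent effect, assuming plain batch gradient descent on a least-squares linear model. The data are made up and chosen so that both features and the target already have zero mean (so no intercept term is needed), with the second feature about ten times larger than the first. `standardize_columns` and `gradient_descent_steps` are hypothetical helpers, and the step size 1 / trace(XᵀX / n) is an assumption that keeps both runs stable.

```python
import math

def standardize_columns(rows):
    """Shift each column to zero mean and scale it to unit (population) variance."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]

def gradient_descent_steps(X, y, tol=1e-8, max_iter=100_000):
    """Run gradient descent on (1/2n)||Xw - y||^2; return steps until ||grad|| < tol."""
    n, d = len(X), len(X[0])
    # Step size 1 / trace(X^T X / n): the trace bounds the largest eigenvalue,
    # so this step size cannot diverge.
    lr = n / sum(v * v for row in X for v in row)
    w = [0.0] * d
    for step in range(max_iter):
        residuals = [sum(wj * xj for wj, xj in zip(w, row)) - yi for row, yi in zip(X, y)]
        grad = [sum(r * row[j] for r, row in zip(residuals, X)) / n for j in range(d)]
        if math.sqrt(sum(g * g for g in grad)) < tol:
            return step
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return max_iter

# Hypothetical zero-mean data; the second feature is roughly 10x larger.
X = [[-2.0, 20.0], [-1.0, -20.0], [0.0, 10.0], [1.0, -10.0], [2.0, 0.0]]
y = [-2.0, -7.0, 2.0, 1.0, 6.0]

raw_steps = gradient_descent_steps(X, y)
scaled_steps = gradient_descent_steps(standardize_columns(X), y)
print("steps without scaling:", raw_steps)
print("steps with scaling:   ", scaled_steps)
```

On this data the standardized run needs far fewer steps, because standardization makes the loss surface much less elongated; the exact counts depend on the tolerance and step size chosen.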

3. Methods of feature scaling

Rescaling

The simplest method is rescaling (also called min-max normalization): each feature is linearly mapped into a fixed range, usually [0, 1] or [−1, 1]. Which target range to use depends on the nature of the data. The general formula is:

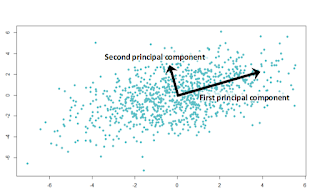
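A minimal sketch of rescaling, assuming the image shows the standard min-max formula from the Wikipedia source, $x' = \frac{x - \min(x)}{\max(x) - \min(x)}$, generalized to a target range $[a, b]$ as $x' = a + \frac{(x - \min(x))(b - a)}{\max(x) - \min(x)}$. The `rescale` function and the sample values are hypothetical.

```python
def rescale(values, lo=0.0, hi=1.0):
    """Min-max rescaling of a list of numbers into the range [lo, hi]."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        # A constant feature has no range to stretch.
        raise ValueError("cannot rescale a constant feature (max == min)")
    return [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]

weights = [50.0, 60.0, 75.0, 100.0]  # hypothetical feature values
print(rescale(weights))              # [0.0, 0.2, 0.5, 1.0]
print(rescale(weights, -1.0, 1.0))   # [-1.0, -0.6, 0.0, 1.0]
```

The minimum and maximum must be known (or estimated) in advance, which is why rescaling is awkward when the range of a feature is unclear.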

Standardization

Feature standardization makes the values of each feature in the data have zero mean (by subtracting the mean in the numerator) and unit variance (by dividing by the standard deviation).

$$x' = \frac{x - \bar{x}}{\sigma}$$

where $x$ is the original feature vector, $\bar{x}$ is the mean of that feature vector, and $\sigma$ is its standard deviation.
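A minimal sketch of the standardization formula using Python's `statistics` module. It assumes σ is the population standard deviation (`statistics.pstdev`); using the sample standard deviation instead would give slightly different values. The `standardize` function and the sample values are hypothetical.

```python
import statistics

def standardize(values):
    """Z-score standardization: subtract the mean, divide by the population std."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("cannot standardize a constant feature (std == 0)")
    return [(v - mean) / std for v in values]

heights = [150.0, 160.0, 170.0, 180.0, 190.0]  # hypothetical feature values
z = standardize(heights)
print(z)
# The result has mean approximately 0 and standard deviation approximately 1.
print(statistics.fmean(z), statistics.pstdev(z))
```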

When do we use feature scaling?

We use it when an algorithm relies on the concept of distance, or when we want to speed up an optimization algorithm such as gradient descent. Examples include SVMs and neural networks.

When do we not do it?

We do not do it when the range of a feature is very unclear, that is, when we do not know its minimum and maximum values, or when the algorithm does not use the concept of distance. For example, a multilayer perceptron takes linear combinations of the input data multiplied by weights, so the scale of each input can be accounted for by its weights.
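The idea that weights can absorb the scale of an input can be checked with a small arithmetic sketch, assuming a single linear combination with no bias and no activation (a simplification of one perceptron unit). `predict` and all numbers are hypothetical.

```python
def predict(weights, x):
    """Linear combination of inputs and weights (no bias, no activation)."""
    return sum(w * xi for w, xi in zip(weights, x))

x = [2.0, 3000.0]   # hypothetical raw inputs; the second is on a large scale
w = [0.5, 0.002]    # hypothetical weights for the raw inputs
c = 1000.0          # shrink the second input by a factor of c

x_scaled = [x[0], x[1] / c]
w_scaled = [w[0], w[1] * c]  # growing the weight by c compensates exactly

print(predict(w, x), predict(w_scaled, x_scaled))
```

This only shows that a suitable weight exists for a rescaled input; it says nothing about how quickly training will find that weight.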

Source:

1. https://en.wikipedia.org/wiki/Feature_scaling

2. http://stackoverflow.com/questions/26225344/why-feature-scaling
